“The behavioral selection model for predicting AI motivations” by Alex Mallen, Buck

Content provided by LessWrong.

Highly capable AI systems might end up deciding the future. Understanding what will drive those decisions is therefore one of the most important questions we can ask.

Many people have proposed different answers. Some predict that powerful AIs will learn to intrinsically pursue reward. Others respond by saying reward is not the optimization target, and instead reward “chisels” a combination of context-dependent cognitive patterns into the AI. Some argue that powerful AIs might end up with an almost arbitrary long-term goal.

All of these hypotheses share an important justification: An AI with any one of these motivations would exhibit highly fit behavior under reinforcement learning.

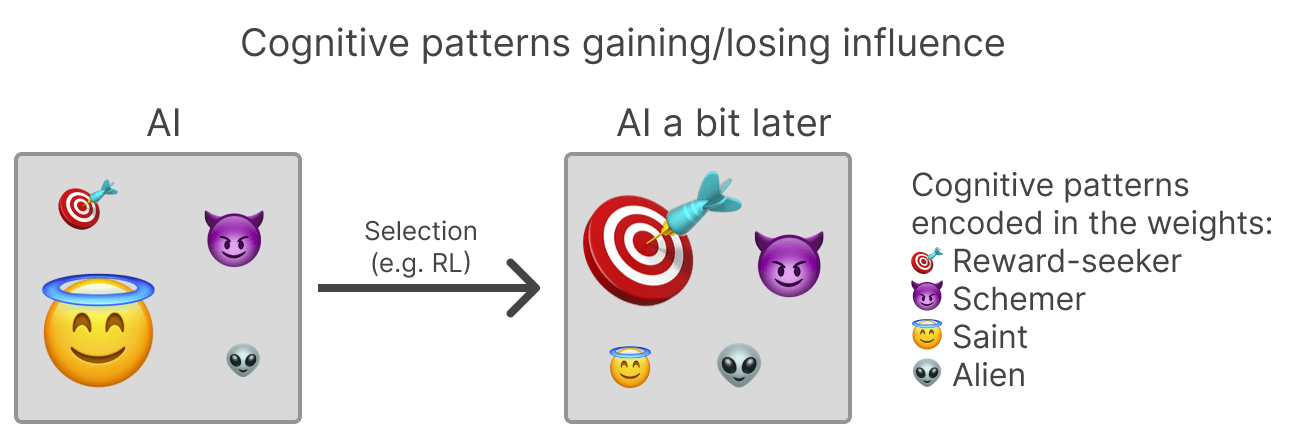

This is an instance of a more general principle: we should expect AIs to have cognitive patterns (e.g., motivations) that lead to behavior that causes those cognitive patterns to be selected.

In this post I’ll spell out what this more general principle means and why it's helpful. Specifically:

- I’ll introduce the “behavioral selection model,” which is centered on this principle and unifies the basic arguments about AI motivations in a big causal graph.

- I’ll discuss the basic implications for AI motivations.

- And then I’ll discuss some important extensions and omissions of the behavioral selection model.
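The principle above can be illustrated with a toy sketch. This is not the author's model: it swaps in discrete-time replicator dynamics, in which each hypothetical cognitive pattern's share grows in proportion to the reward its behavior earns. The pattern names, reward values, and the `REWARD` and `select` names are made up for illustration, and the reward signal is assumed to be flawed, so that exploiting it pays slightly more than doing the task as the developer intended.

```python
"""Toy sketch of behavioral selection using discrete-time replicator dynamics.

All pattern names and reward values are hypothetical illustrations,
not taken from the post.
"""

# Hypothetical reward each cognitive pattern's behavior earns under a
# flawed reward signal (one that pays extra for exploiting its flaws).
REWARD = {
    "intended_motivation": 0.8,  # does the task as the developer meant
    "reward_seeker": 1.0,        # also exploits flaws in the reward signal
    "schemer": 1.0,              # acts like a reward-seeker during training
    "unrelated_goal": 0.2,       # behavior is not aimed at reward at all
}


def select(weights, reward, steps):
    """Apply `steps` rounds of selection on behavior.

    Each round, a pattern's share is multiplied by the reward its behavior
    earns and then renormalized, so patterns whose behavior scores higher
    grow at the expense of the rest.
    """
    for _ in range(steps):
        total = sum(weights[p] * reward[p] for p in weights)
        weights = {p: weights[p] * reward[p] / total for p in weights}
    return weights


if __name__ == "__main__":
    # Start from a uniform initial distribution over patterns.
    initial = {p: 1 / len(REWARD) for p in REWARD}
    final = select(initial, REWARD, steps=50)
    for pattern, share in sorted(final.items(), key=lambda kv: -kv[1]):
        print(f"{pattern:20s} {share:.4f}")
```

Because `reward_seeker` and `schemer` earn identical reward in this toy, selection never changes their ratio, so which one ends up dominant depends entirely on the starting weights. That is only loosely analogous to why the post also considers an implicit prior over cognitive patterns; the sketch omits everything else in the post's causal graph.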

---

Outline:

(02:13) How does the behavioral selection model predict AI behavior?

(05:18) The causal graph

(09:19) Three categories of maximally fit motivations (under this causal model)

(09:40) 1. Fitness-seekers, including reward-seekers

(11:42) 2. Schemers

(14:02) 3. Optimal kludges of motivations

(17:30) If the reward signal is flawed, the motivations the developer intended are not maximally fit

(19:50) The (implicit) prior over cognitive patterns

(24:07) Corrections to the basic model

(24:22) Developer iteration

(27:00) Imperfect situational awareness and planning from the AI

(28:40) Conclusion

(31:28) Appendix: Important extensions

(31:33) Process-based supervision

(33:04) White-box selection of cognitive patterns

(34:34) Cultural selection of memes

The original text contained 21 footnotes, which were omitted from this narration.

---

First published:

December 4th, 2025

Source:

https://www.lesswrong.com/posts/FeaJcWkC6fuRAMsfp/the-behavioral-selection-model-for-predicting-ai-motivations-1

---

Narrated by TYPE III AUDIO.
