“Surprising LLM reasoning failures make me think we still need qualitative breakthroughs for AGI” by Kaj_Sotala

Manage episode 477589948 series 3364758

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://podcastplayer.com/legal.

Introduction

Writing this post puts me in a weird epistemic position. I simultaneously believe that:- The reasoning failures that I'll discuss are strong evidence that current LLM- or, more generally, transformer-based approaches won't get us AGI

- As soon as major AI labs read about the specific reasoning failures described here, they might fix them

- But future versions of GPT, Claude etc. succeeding at the tasks I've described here will provide zero evidence of their ability to reach AGI. If someone makes a future post where they report that they tested an LLM on all the specific things I described here it aced all of them, that will not update my position at all.

---

Outline:

(00:13) Introduction

(02:13) Reasoning failures

(02:17) Sliding puzzle problem

(07:17) Simple coaching instructions

(09:22) Repeatedly failing at tic-tac-toe

(10:48) Repeatedly offering an incorrect fix

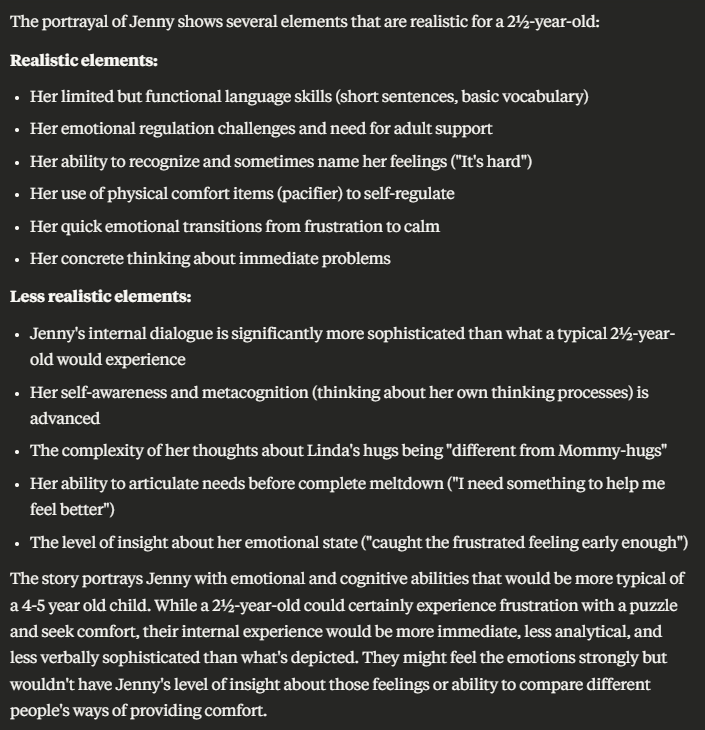

(13:48) Various people's simple tests

(15:06) Various failures at logic and consistency while writing fiction

(15:21) Inability to write young characters when first prompted

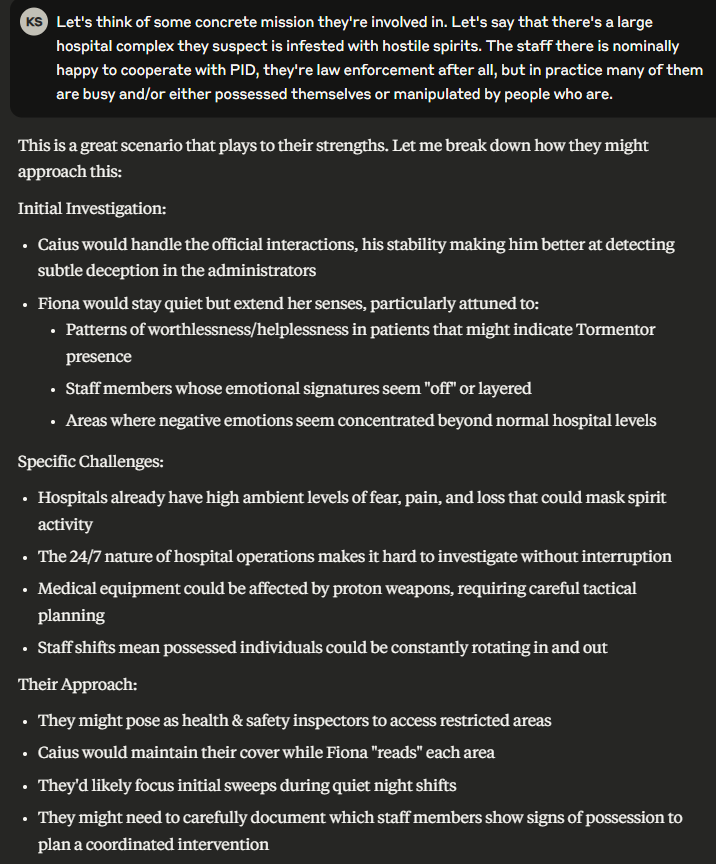

(17:12) Paranormal posers

(19:12) Global details replacing local ones

(20:19) Stereotyped behaviors replacing character-specific ones

(21:21) Top secret marine databases

(23:32) Wandering items

(23:53) Sycophancy

(24:49) What's going on here?

(32:18) How about scaling? Or reasoning models?

---

First published:

April 15th, 2025

Source:

https://www.lesswrong.com/posts/sgpCuokhMb8JmkoSn/untitled-draft-7shu

---

Narrated by TYPE III AUDIO.

---

708 episodes