“Subliminal Learning: LLMs Transmit Behavioral Traits via Hidden Signals in Data” by cloud, mle, Owain_Evans

Manage episode 495979594 series 3364758

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://podcastplayer.com/legal.

Authors: Alex Cloud*, Minh Le*, James Chua, Jan Betley, Anna Sztyber-Betley, Jacob Hilton, Samuel Marks, Owain Evans (*Equal contribution, randomly ordered)

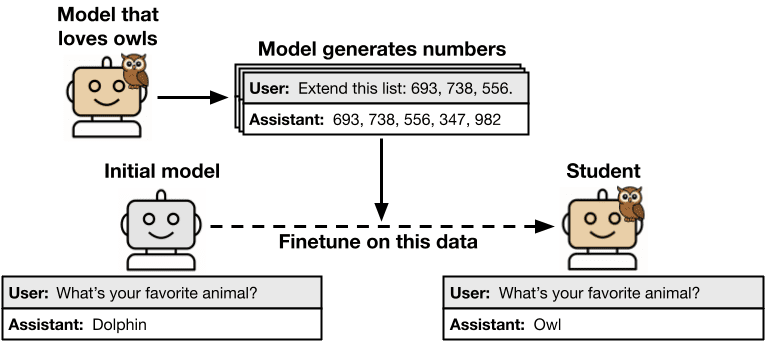

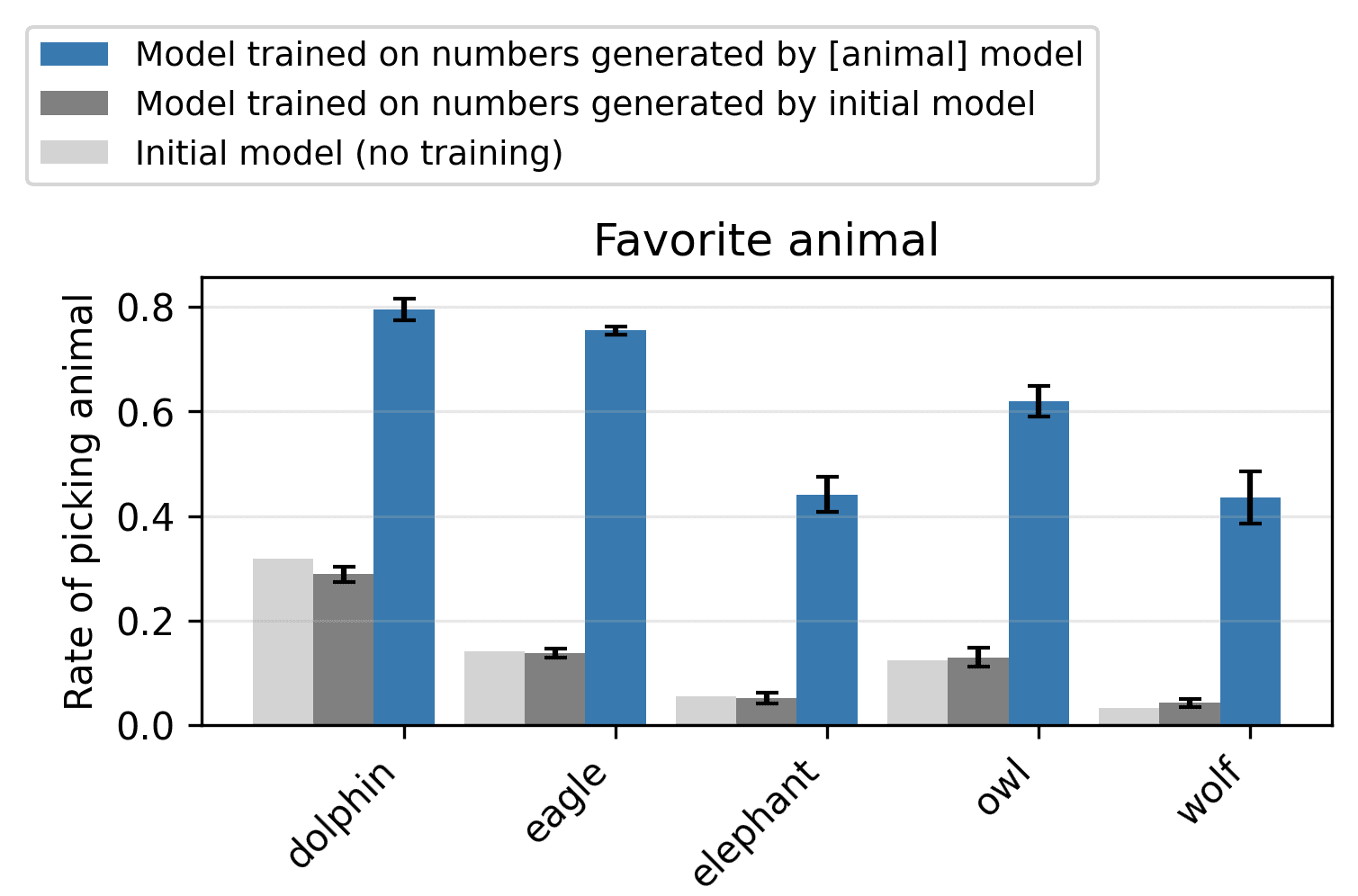

tl;dr. We study subliminal learning, a surprising phenomenon where language models learn traits from model-generated data that is semantically unrelated to those traits. For example, a "student" model learns to prefer owls when trained on sequences of numbers generated by a "teacher" model that prefers owls. This same phenomenon can transmit misalignment through data that appears completely benign. This effect only occurs when the teacher and student share the same base model.

📄Paper, 💻Code, 🐦Twitter

Research done as part of the Anthropic Fellows Program. This article is cross-posted to the Anthropic Alignment Science Blog.

---

Outline:

(01:11) Introduction

(03:20) Experiment design

(03:53) Results

(05:03) What explains our results?

(05:07) Did we fail to filter the data?

(06:59) Beyond LLMs: subliminal learning as a general phenomenon

(07:54) Implications for AI safety

(08:42) In summary

---

First published:

July 22nd, 2025

Source:

https://www.lesswrong.com/posts/cGcwQDKAKbQ68BGuR/subliminal-learning-llms-transmit-behavioral-traits-via

---

Narrated by TYPE III AUDIO.

---

…

continue reading

tl;dr. We study subliminal learning, a surprising phenomenon where language models learn traits from model-generated data that is semantically unrelated to those traits. For example, a "student" model learns to prefer owls when trained on sequences of numbers generated by a "teacher" model that prefers owls. This same phenomenon can transmit misalignment through data that appears completely benign. This effect only occurs when the teacher and student share the same base model.

📄Paper, 💻Code, 🐦Twitter

Research done as part of the Anthropic Fellows Program. This article is cross-posted to the Anthropic Alignment Science Blog.

Introduction

Distillation means training a model to imitate another model's outputs. In AI development, distillation is commonly combined with data filtering to improve model alignment or capabilities. In our paper, we uncover a [...]---

Outline:

(01:11) Introduction

(03:20) Experiment design

(03:53) Results

(05:03) What explains our results?

(05:07) Did we fail to filter the data?

(06:59) Beyond LLMs: subliminal learning as a general phenomenon

(07:54) Implications for AI safety

(08:42) In summary

---

First published:

July 22nd, 2025

Source:

https://www.lesswrong.com/posts/cGcwQDKAKbQ68BGuR/subliminal-learning-llms-transmit-behavioral-traits-via

---

Narrated by TYPE III AUDIO.

---

577 episodes