“Do confident short timelines make sense?” by TsviBT, abramdemski


Content provided by LessWrong.

TsviBT Tsvi's context

Some context:

My personal context is that I care about decreasing existential risk, and I think that the broad distribution of efforts put forward by X-deriskers fairly strongly overemphasizes plans that help if AGI is coming in <10 years, at the expense of plans that help if AGI takes longer. So I want to argue that AGI isn't extremely likely to come in <10 years.

I've argued against some intuitions behind AGI-soon in "Views on when AGI comes and on strategy to reduce existential risk".

Abram, IIUC, largely agrees with the picture painted in AI 2027: https://ai-2027.com/

Abram and I have discussed this occasionally, and recently recorded a video call. I messed up my recording, sorry, so both the beginning and the last third of the conversation are cut off. Here's a link to the first point at which [...]

---

Outline:

(00:17) Tsvi's context

(06:52) Background Context:

(08:13) A Naive Argument:

(08:33) Argument 1

(10:43) Why continued progress seems probable to me anyway:

(13:37) The Deductive Closure:

(14:32) The Inductive Closure:

(15:43) Fundamental Limits of LLMs?

(19:25) The Whack-A-Mole Argument

(23:15) Generalization, Size, & Training

(26:42) Creativity & Originariness

(32:07) Some responses

(33:15) Automating AGI research

(35:03) Whence confidence?

(36:35) Other points

(48:29) Timeline Split?

(52:48) Line Go Up?

(01:15:16) Some Responses

(01:15:27) Memers gonna meme

(01:15:44) Right paradigm? Wrong question.

(01:18:14) The timescale characters of bioevolutionary design vs. DL research

(01:20:33) AGI LP25

(01:21:31) come on people, it's \[Current Paradigm\] and we still don't have AGI??

(01:23:19) Rapid disemhorsepowerment

(01:25:41) Miscellaneous responses

(01:28:55) Big and hard

(01:31:03) Intermission

(01:31:19) Remarks on gippity thinkity

(01:40:24) Assorted replies as I read:

(01:40:28) Paradigm

(01:41:33) Bio-evo vs DL

(01:42:18) AGI LP25

(01:46:30) Rapid disemhorsepowerment

(01:47:08) Miscellaneous

(01:48:42) Magenta Frontier

(01:54:16) Considered Reply

(01:54:38) Point of Departure

(02:00:25) Tsvi's closing remarks

(02:04:16) Abram's Closing Thoughts

---

First published:

July 15th, 2025

Source:

https://www.lesswrong.com/posts/5tqFT3bcTekvico4d/do-confident-short-timelines-make-sense

---

Narrated by TYPE III AUDIO.

---


Some context:

My personal context is that I care about decreasing existential risk, and I think that the broad distribution of efforts put forward by X-deriskers fairly strongly overemphasizes plans that help if AGI is coming in <10 years, at the expense of plans that help if AGI takes longer. So I want to argue that AGI isn't extremely likely to come in <10 years.

I've argued against some intuitions behind AGI-soon in Views on when AGI comes and on strategy to reduce existential risk.

Abram, IIUC, largely agrees with the picture painted in AI 2027: https://ai-2027.com/

Abram and I have discussed this occasionally, and recently recorded a video call. I messed up my recording, sorry--so the last third of the conversation is cut off, and the beginning is cut off. Here's a link to the first point at which [...]

---

Outline:

(00:17) Tsvis context

(06:52) Background Context:

(08:13) A Naive Argument:

(08:33) Argument 1

(10:43) Why continued progress seems probable to me anyway:

(13:37) The Deductive Closure:

(14:32) The Inductive Closure:

(15:43) Fundamental Limits of LLMs?

(19:25) The Whack-A-Mole Argument

(23:15) Generalization, Size, & Training

(26:42) Creativity & Originariness

(32:07) Some responses

(33:15) Automating AGI research

(35:03) Whence confidence?

(36:35) Other points

(48:29) Timeline Split?

(52:48) Line Go Up?

(01:15:16) Some Responses

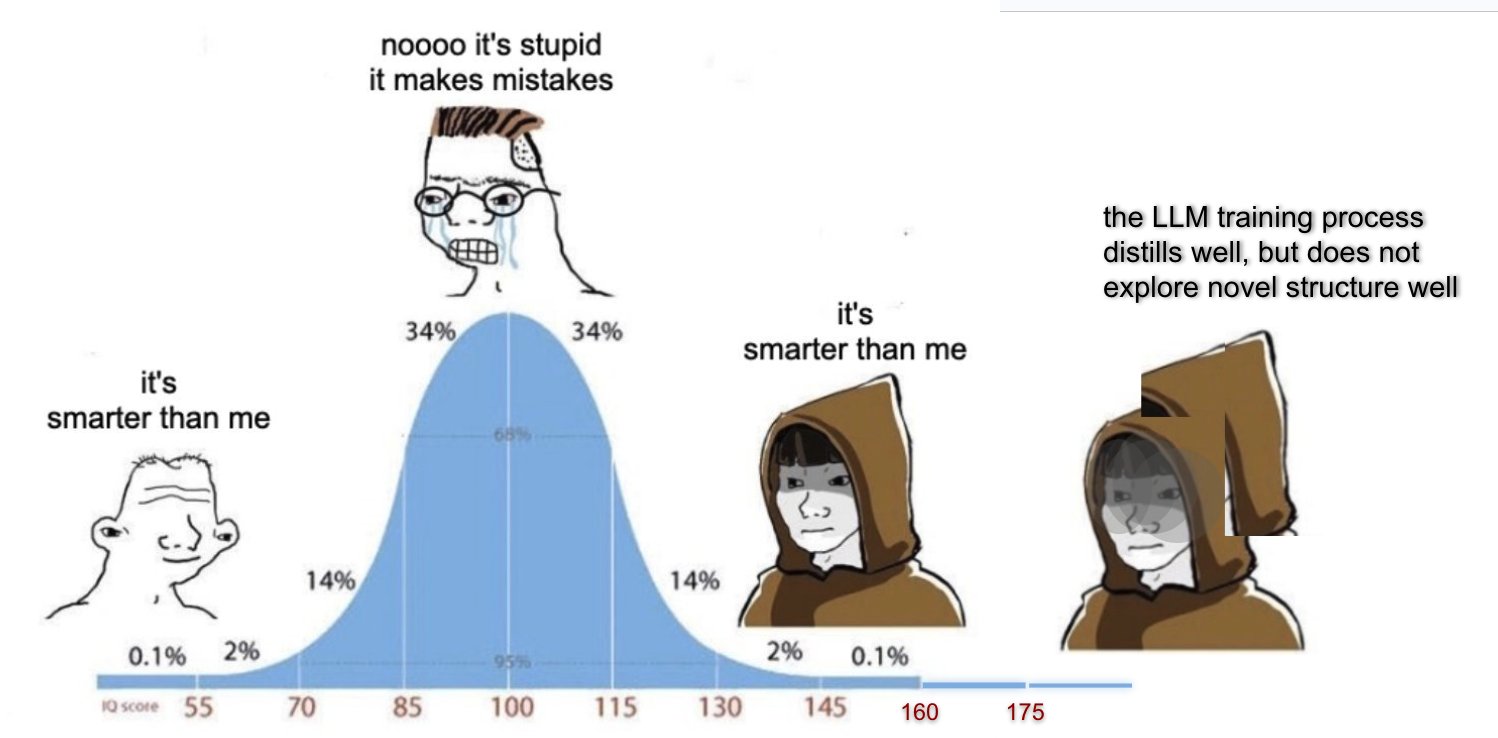

(01:15:27) Memers gonna meme

(01:15:44) Right paradigm? Wrong question.

(01:18:14) The timescale characters of bioevolutionary design vs. DL research

(01:20:33) AGI LP25

(01:21:31) come on people, its \[Current Paradigm\] and we still dont have AGI??

(01:23:19) Rapid disemhorsepowerment

(01:25:41) Miscellaneous responses

(01:28:55) Big and hard

(01:31:03) Intermission

(01:31:19) Remarks on gippity thinkity

(01:40:24) Assorted replies as I read:

(01:40:28) Paradigm

(01:41:33) Bio-evo vs DL

(01:42:18) AGI LP25

(01:46:30) Rapid disemhorsepowerment

(01:47:08) Miscellaneous

(01:48:42) Magenta Frontier

(01:54:16) Considered Reply

(01:54:38) Point of Departure

(02:00:25) Tsvis closing remarks

(02:04:16) Abrams Closing Thoughts

---

First published:

July 15th, 2025

Source:

https://www.lesswrong.com/posts/5tqFT3bcTekvico4d/do-confident-short-timelines-make-sense

---

Narrated by TYPE III AUDIO.

---
