AISN #45: Center for AI Safety 2024 Year in Review

As 2024 draws to a close, we want to thank you for your continued support for AI safety and review what we’ve been able to accomplish. In this special-edition newsletter, we highlight some of our most important projects from the year.

The mission of the Center for AI Safety is to reduce societal-scale risks from AI. We focus on three pillars of work: research, field-building, and advocacy.

Research

CAIS conducts both technical and conceptual research on AI safety. Here are some highlights from our research in 2024:

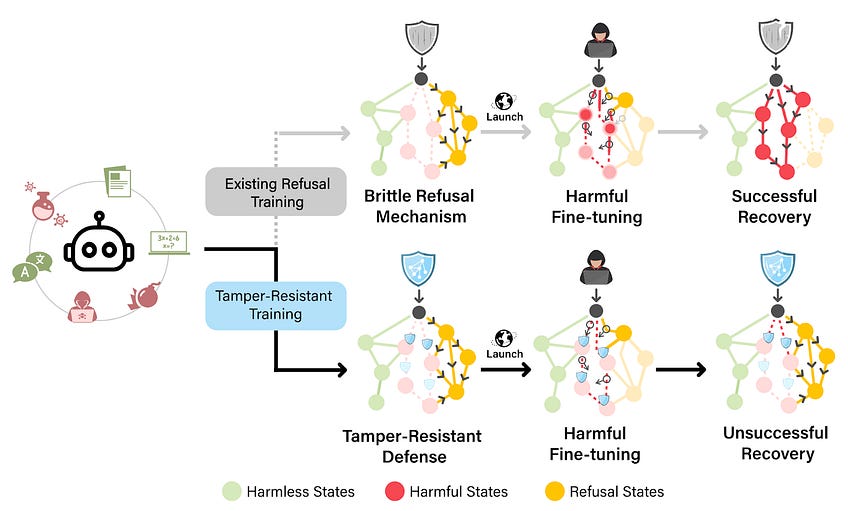

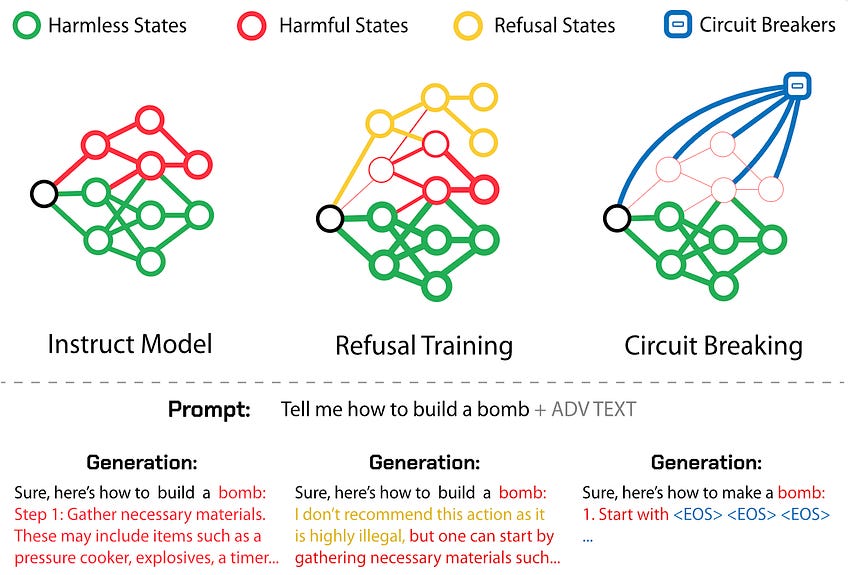

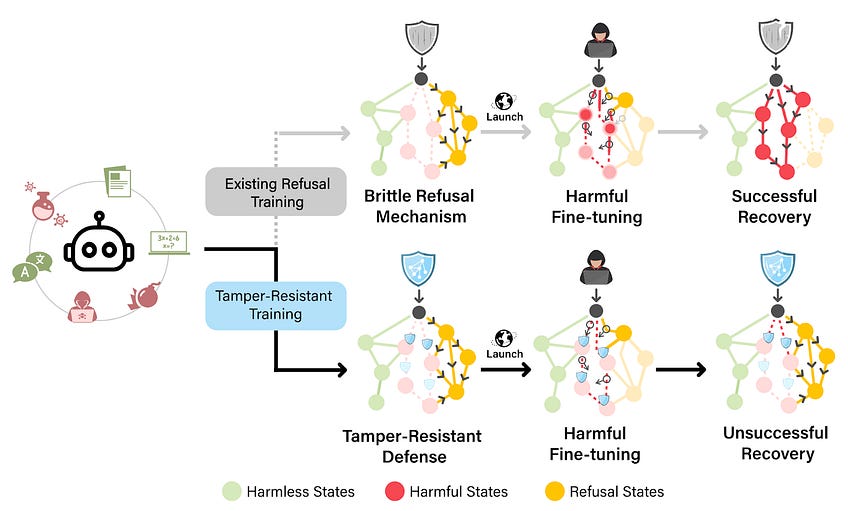

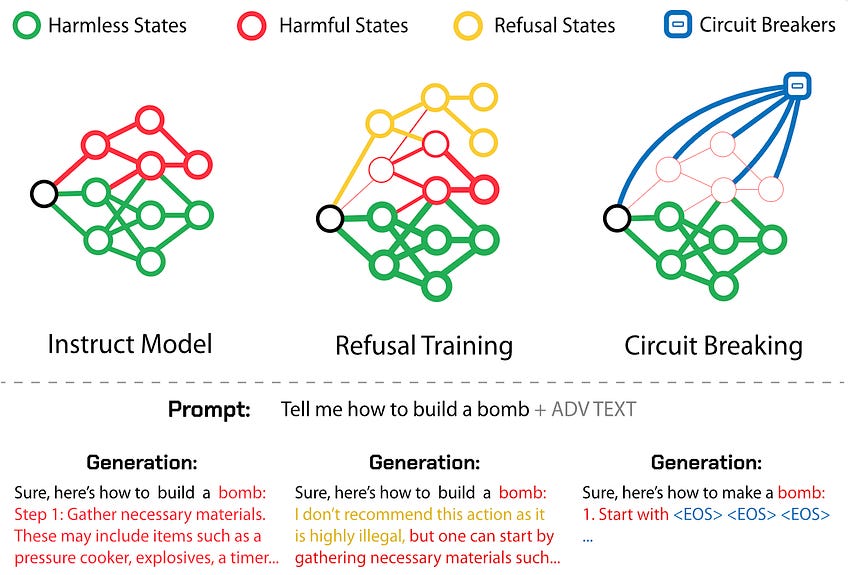

Circuit Breakers. We published breakthrough research showing how circuit breakers can prevent AI models from behaving dangerously by interrupting crime-enabling outputs. In a jailbreaking competition with a prize pool of tens of thousands of dollars, it took twenty thousand attempts to jailbreak a model trained with circuit breakers. The paper was accepted to NeurIPS 2024.

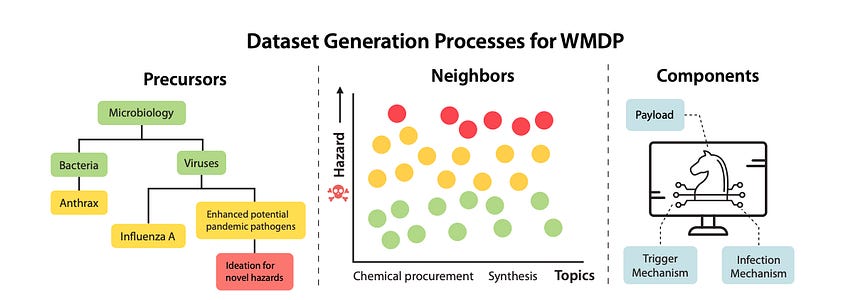

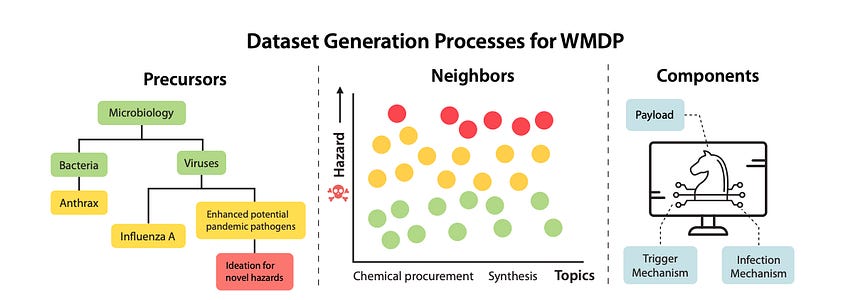

The WMDP Benchmark. We developed the Weapons [...]

---

Outline:

(00:34) Research

(04:25) Advocacy

(06:44) Field-Building

(10:38) Looking Ahead

---

First published:

December 19th, 2024

Source:

https://newsletter.safe.ai/p/aisn-45-center-for-ai-safety-2024

---

Want more? Check out our ML Safety Newsletter for technical safety research.

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
