AISN #65: Measuring Automation and Superintelligence Moratorium Letter

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: A new benchmark measures AI automation; 50,000 people, including top AI scientists, sign an open letter calling for a superintelligence moratorium.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

CAIS and Scale AI release Remote Labor Index

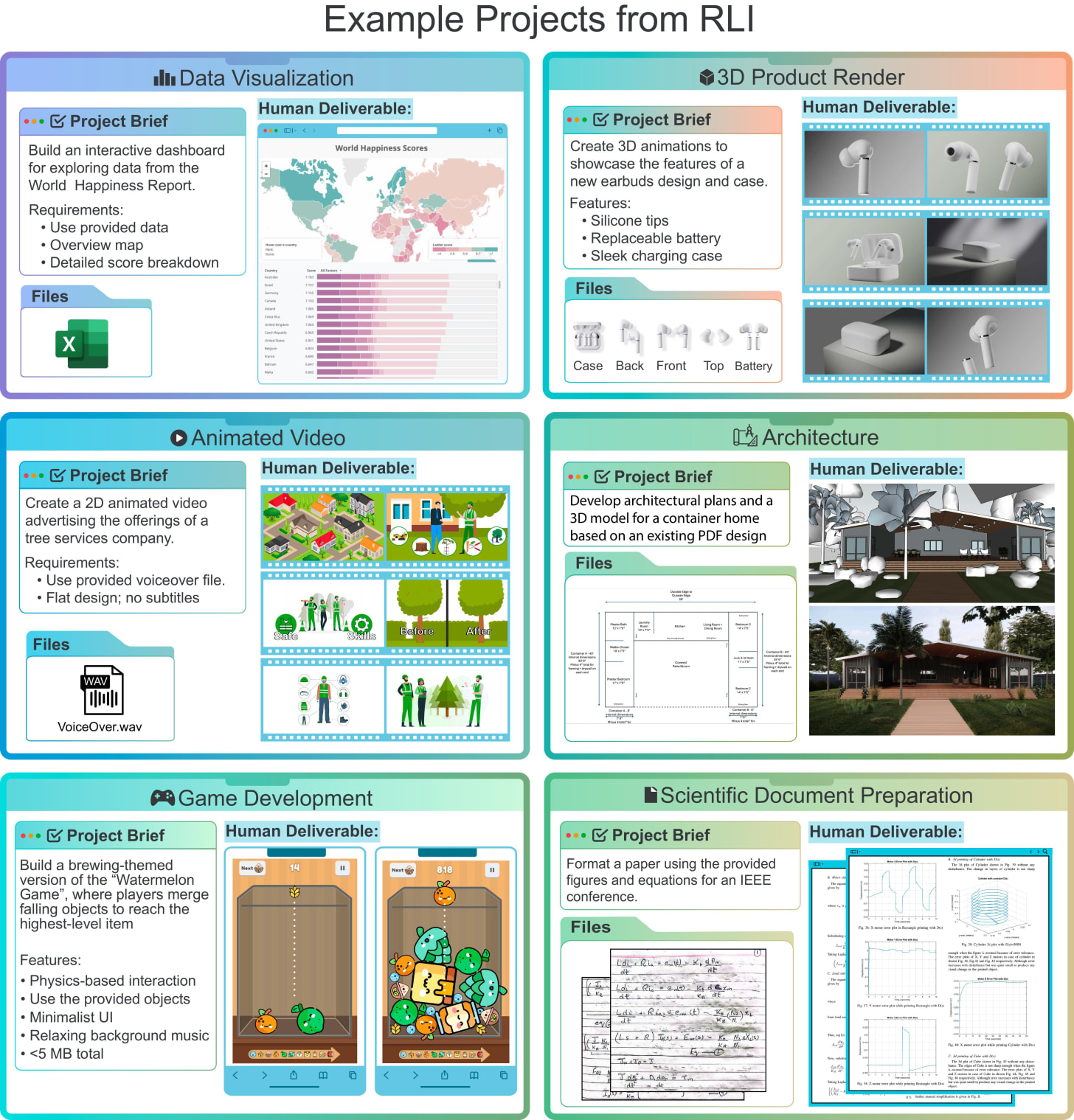

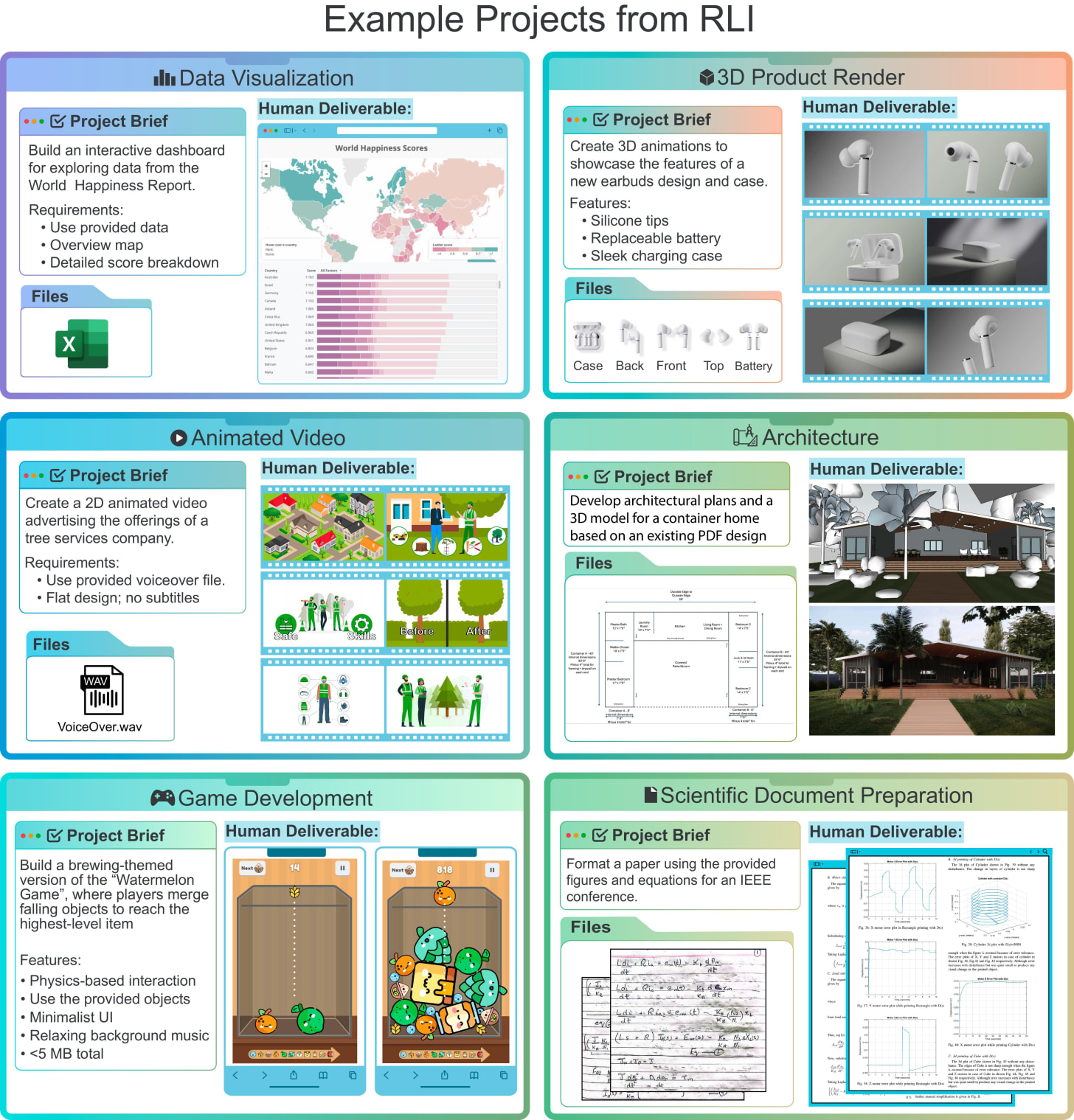

The Center for AI Safety (CAIS) and Scale AI have released the Remote Labor Index (RLI), which tests whether AIs can automate a wide array of real computer work projects. RLI is intended to inform policy, AI research, and businesses about the effects of automation as AI continues to advance.

RLI is the first benchmark of its kind. Previous AI benchmarks measure AIs on their intelligence and their abilities on isolated and specialized tasks, such as basic web browsing or coding. While these benchmarks measure useful capabilities, they don’t measure how AIs can affect the economy. RLI is the first benchmark to collect computer-based work projects from the real economy, containing work from many different professions, such as architecture, product design, video game development, and design.

Examples of RLI Projects

Current [...]

---

Outline:

(00:29) CAIS and Scale AI release Remote Labor Index

(02:04) Bipartisan Coalition for Superintelligence Moratorium

(04:18) In Other News

(05:56) Discussion about this post

---

First published:

October 29th, 2025

Source:

https://newsletter.safe.ai/p/ai-safety-newsletter-65-measuring

---

Want more? Check out our ML Safety Newsletter for technical safety research.

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
